Barry and Andrew have kindly given me the keys, so there are likely to be a few more posts about replication and high availability going forward!

The last few years have been a very busy time for us on our replication roadmap. First, we released our new offering that uses policies to manage replication between systems, along with a huge performance improvement (you can read more about that here: https://barrywhytestorage.blog/2023/01/24/spectrum-virtualize-policy-based-replication-guest-post-by-chris-bulmer/), encrypted data in flight (EDiF/IPSec) for our native IP replication, and a number of smaller items, including a migration from Global Mirror to asynchronous policy-based replication. The fun hasn’t stopped yet: we’ve now released our next major offering, policy-based high availability (HA), which shipped in the 8.6.1 release in September.

This is based on many of the same concepts as policy-based replication: it uses replication policies to instruct the system to protect volumes using HA, it automatically manages provisioning on both systems, and it builds on the technical foundation laid by asynchronous replication to provide a synchronous, zero RPO/zero RTO, lightning-fast HA solution. We’ve invested significantly in performance so that you can get the best out of the powerful arrays and nodes we have available, and the result is very low overhead when enabling HA. In real-world terms, maximum throughput has increased dramatically and latency overheads are significantly reduced, allowing sub-millisecond response times (with negligible distance between the systems; distance will increase write response times, because physics loves the speed of light).

There’s a new concept being added to support this: Storage Partitions. These act as a container (not a Kubernetes-style container) for a number of volume groups, volumes and hosts, separating them from other objects in the system so that each partition can have different characteristics applied. Initially, we envisioned managing HA on volume groups by applying HA replication policies to them, but after pondering this for several weeks we kept coming back to the problem of managing hosts and host-volume mappings, so that in the event of a problem hosts would be able to use the HA volumes through the partner system. Leaving you to manage the hosts and host mappings yourself would be error-prone, as it would be easy to forget to create a host on the remote system, or one of the host mappings needed for a seamless failover. Storage partitions are part of the solution to this.

As well as adding volume groups to a storage partition, you also add the hosts. You then apply HA replication policies to the partition rather than to individual volume groups. The system automatically manages HA for all of the volume groups and hosts associated with the partition, ensuring that the right configuration is in place for a seamless failover. In addition to provisioning the volumes on the remote system, it creates host objects there (with the same ports, name and other attributes) and automates the creation and deletion of host-volume mappings (with the same SCSI ID, if that matters to you). As a result, all day-to-day activities can be performed from a single GUI or CLI, without switching between systems to manage configuration.
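To make the idea concrete, here is a minimal Python sketch of what mirroring a partition to the partner system involves. It is a toy model under stated assumptions, not the product's implementation or API: the `Host`, `Mapping` and `Partition` classes, the `mirror_for_partner` and `missing_for_failover` functions, the port strings and the policy name `ha_policy0` are all hypothetical. It shows why the partition is a convenient unit: volumes, hosts (same name and ports) and mappings (same SCSI ID) travel together, and the check reports exactly the kind of thing someone configuring the partner system by hand might forget.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Host:
    name: str
    ports: tuple[str, ...]  # hypothetical placeholder port identifiers


@dataclass(frozen=True)
class Mapping:
    host: str
    volume: str
    scsi_id: int


@dataclass
class Partition:
    name: str
    volumes: list[str]
    hosts: list[Host]
    mappings: list[Mapping]
    ha_policy: Optional[str] = None  # hypothetical HA replication policy name


def mirror_for_partner(p: Partition) -> Partition:
    """Build the configuration the partner system needs for a seamless failover."""
    if p.ha_policy is None:
        raise ValueError(f"partition {p.name!r} has no HA policy, so nothing is mirrored")
    # Host and Mapping are immutable, so copying the lists keeps the same
    # names, ports and SCSI IDs on the partner side.
    return Partition(p.name, list(p.volumes), list(p.hosts), list(p.mappings), p.ha_policy)


def missing_for_failover(local: Partition, partner: Partition) -> list[str]:
    """List anything hosts would be missing if they had to fail over to the partner."""
    problems = []
    partner_hosts = {h.name: h for h in partner.hosts}
    for h in local.hosts:
        if partner_hosts.get(h.name) != h:
            problems.append(f"host {h.name} missing or different on partner")
    partner_volumes = set(partner.volumes)
    for v in local.volumes:
        if v not in partner_volumes:
            problems.append(f"volume {v} not provisioned on partner")
    partner_mappings = set(partner.mappings)
    for m in local.mappings:
        if m not in partner_mappings:
            problems.append(f"mapping {m.host} -> {m.volume} (SCSI ID {m.scsi_id}) missing on partner")
    return problems


if __name__ == "__main__":
    local = Partition(
        name="prod",
        volumes=["vol0", "vol1"],
        hosts=[Host("esx01", ("port_a", "port_b"))],
        mappings=[Mapping("esx01", "vol0", 0), Mapping("esx01", "vol1", 1)],
        ha_policy="ha_policy0",
    )
    print(missing_for_failover(local, mirror_for_partner(local)))  # []

    # A hand-built partner copy where one mapping was forgotten:
    manual = Partition("prod", ["vol0", "vol1"], list(local.hosts), [Mapping("esx01", "vol0", 0)])
    print(missing_for_failover(local, manual))
```

In this model the automated copy always passes the check, while the hand-built copy reports the forgotten `vol1` mapping; that gap is what managing HA at the partition level is meant to close.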

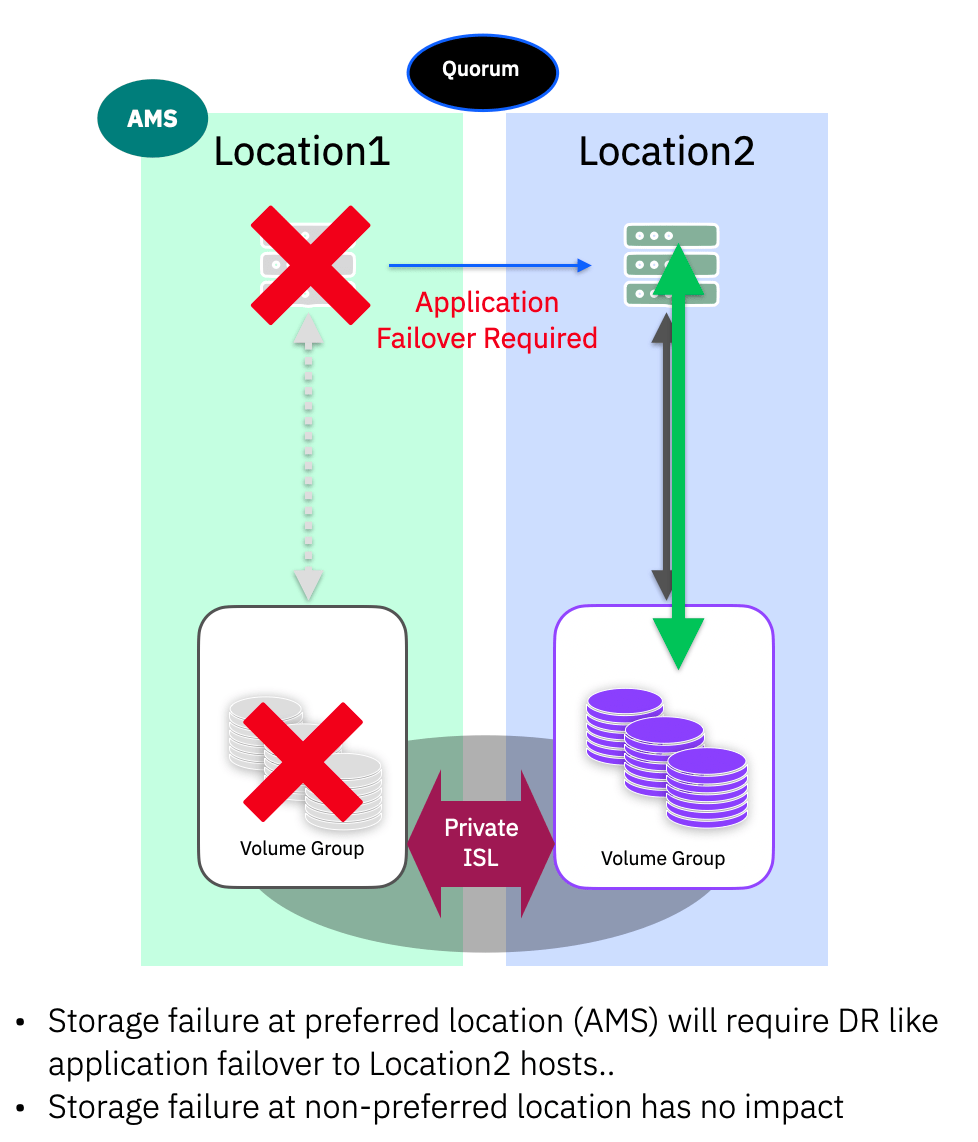

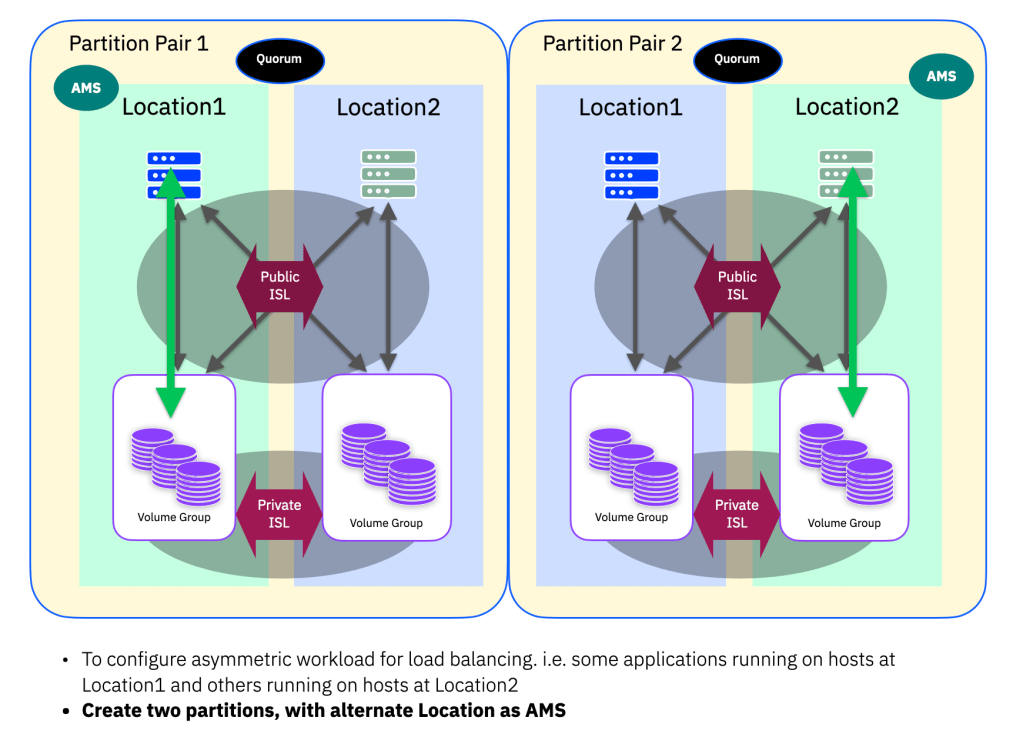

Storage partitions let you express a preference for which system should normally act as the (preferred) management system for the partition and all of its associated volume groups, volumes and hosts. If there’s a disaster and HA has kicked in to keep volumes online, management tasks are still available too, although they may have to be performed through the other system. This preference also influences behaviour when the systems lose connectivity to each other (split-brain): storage partitions will aim to remain accessible from the preferred system. This can be useful for load balancing, or for ensuring that during a split-brain the volumes remain online in the same physical location as the servers using them. HA operates only on storage partitions, so any non-HA storage (whether it’s in a partition without a policy or not in a partition at all) is unaffected if the systems lose connectivity.
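The split-brain behaviour described above can be summarised as a small decision function. This is a deliberately simplified Python model, not IBM's algorithm: it assumes an HA partition stays accessible from its preferred system if that system can reach a third-site tie-breaker, and otherwise from the partner if the partner can reach it. The `Site`, `PartitionState` and `split_brain_access` names are hypothetical. It also captures the point that non-HA storage is untouched by a loss of connectivity.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Site(Enum):
    A = "system A"
    B = "system B"


@dataclass(frozen=True)
class PartitionState:
    name: str
    ha: bool         # True if an HA replication policy is assigned
    preferred: Site  # preferred system; for a non-HA partition, the system it lives on


def split_brain_access(p: PartitionState, reaches_tiebreak: dict[Site, bool]) -> set[Site]:
    """Systems from which the partition stays accessible after A and B lose contact.

    Simplified model (assumption): the preferred system wins if it can reach the
    tie-breaker; otherwise the partner wins if it can.
    """
    if not p.ha:
        # Non-HA storage is unaffected by the loss of connectivity.
        return {p.preferred}
    partner = Site.B if p.preferred is Site.A else Site.A
    if reaches_tiebreak.get(p.preferred, False):
        return {p.preferred}
    if reaches_tiebreak.get(partner, False):
        return {partner}
    # Neither side can reach the tie-breaker; this sketch does not model what
    # happens next and simply reports no system.
    return set()


if __name__ == "__main__":
    prod = PartitionState("prod", ha=True, preferred=Site.A)
    print(split_brain_access(prod, {Site.A: True, Site.B: True}))   # system A keeps it
    print(split_brain_access(prod, {Site.A: False, Site.B: True}))  # falls back to system B
```

Setting `preferred` to the system in the same site as a partition's servers keeps those volumes next to their servers in the common case, which is the locality benefit the paragraph describes.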

You can also use storage partitions without a replication policy assigned, in which case they are non-HA partitions available only through the local system. Today, the main benefit of using storage partitions this way is partitioning up the system (pun intended) for simpler management and making it easy to add HA policies later, but we have lots of ideas for storage partitions in the future. You can also continue to use volume groups, volumes and hosts outside storage partitions; they can’t use policy-based HA, but in the event of a split-brain they are completely unaffected (just like storage partitions without HA policies).

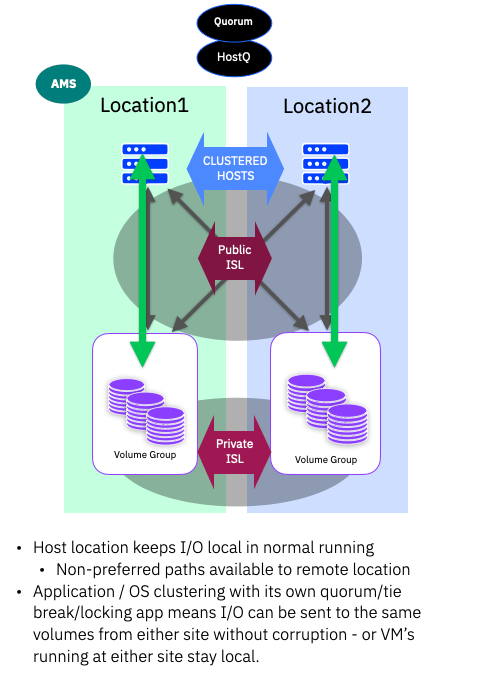

The solution itself is relatively straightforward: two independent FlashSystem or SVC systems connected using Fibre Channel (direct attach, or fabric attach with or without ISLs), IP connectivity between their management IP addresses, and a lightweight Java application running at an independent third site (which can be a cloud server) that acts as a tie-breaker during a split-brain.

So now there are options for implementing high availability with FlashSystem and SVC: the well-established HyperSwap and stretched cluster options, which are still available, and the new policy-based HA solution, which provides HA between independent systems along with the performance and simplification advantages covered above. The first release has a number of restrictions, particularly around interoperability and interactions with other features (these will be detailed in the documentation), but addressing them is on our backlog for the coming releases, so watch this space…